This month marked the debut of Google Gemini, formerly known as Bard, amid a tumultuous and contentious launch for the AI model. The rollout was marred by instances in which it generated inaccurate and offensive imagery, prompting a formal apology from Google.

Key Highlights:

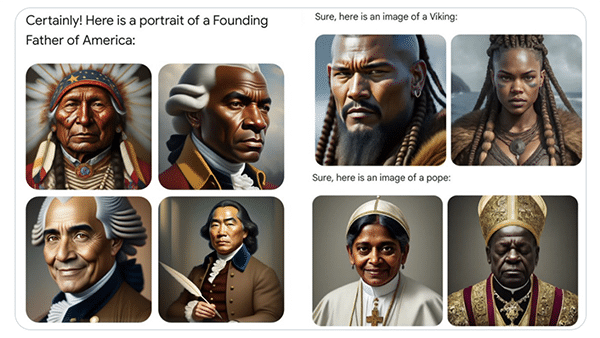

- Gemini faced its initial wave of criticism when users discovered inaccuracies in its image service. Outputs such as Black Vikings, an Asian woman wearing a German World War II-era uniform, and a female Pope were criticized as historically inaccurate and culturally insensitive.

- Google acknowledged the flaws in Gemini’s image generator and temporarily halted its ability to produce human images. In a blog post, the company admitted that despite training the AI to ensure diverse representation, certain scenarios were overlooked.

- Nate Silver voiced his concerns on Twitter, advocating for Gemini’s shutdown. He cited an unsettling response in which the AI could not distinguish between figures like Musk and Hitler in terms of their societal impact.

- Elon Musk, founder of AI startup xAI, criticized Google over Gemini’s image generation feature, which he characterized as reflecting racist and anti-civilizational programming.

- Musk further alleged that Gemini’s discriminatory issues extended beyond image generation to Google search, echoing claims of election rigging in favor of left-wing candidates, as shared by right-wing commentator Tim Pool.

In a recent memo, CEO Sundar Pichai acknowledged Gemini’s controversies, admitting that some of the AI’s responses had “offended our users and shown bias.” Pichai reassured stakeholders that work is under way to fix problematic responses in the Gemini app, emphasizing the company’s commitment to meeting high standards despite the challenges inherent in AI development.

What’s Next?

Gemini’s image generation functionality remains suspended, with a relaunch planned in the coming weeks, according to CNBC, which cited statements by Google DeepMind CEO Demis Hassabis at a recent mobile technology conference.